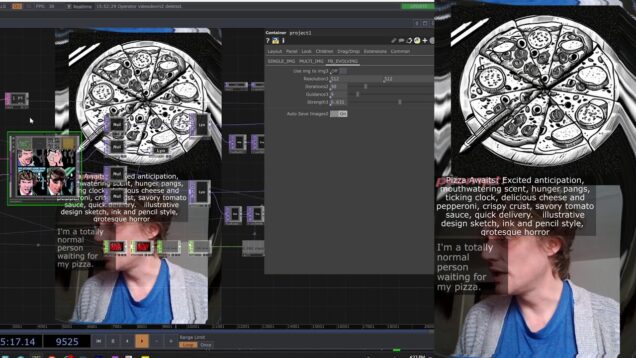

Audio-Reactive StreamDiffusion in TouchDesigner

Today we're trying out StreamDiffusion in TouchDesigner, hopefully with some good techno and more. Feel free to follow me on Twitch and elsewhere: https://www.instagram.com/mrshiftglitch/ https://www.twitch.tv/mrshiftglitch @mr.shiftglitch

![TouchDesigner & StreamDiffusion in REALTIME with live input! [Stable Diffusion in TD]](https://alltd.org/wp-content/uploads/2024/04/touchdesigner-streamdiffusion-in-636x358.jpg)