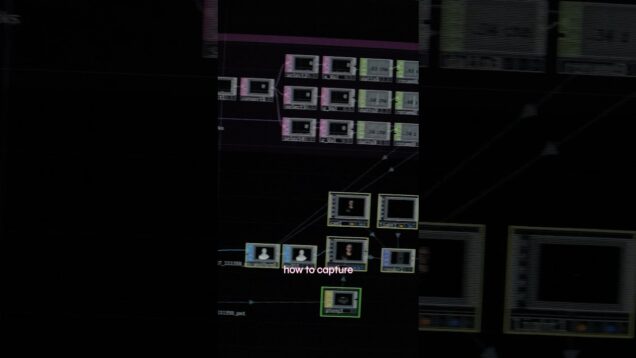

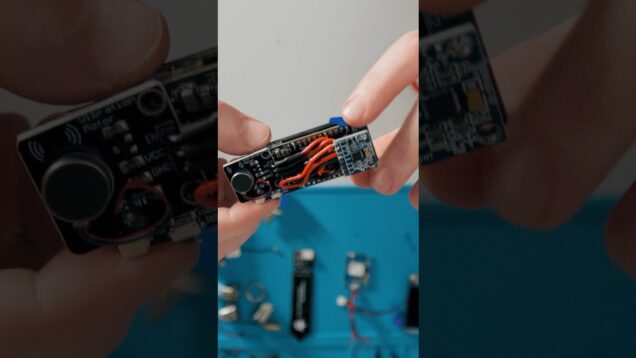

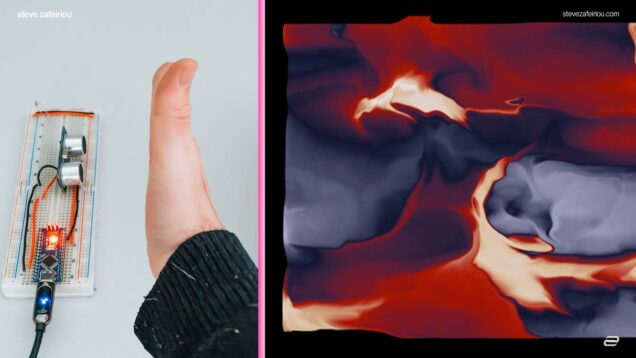

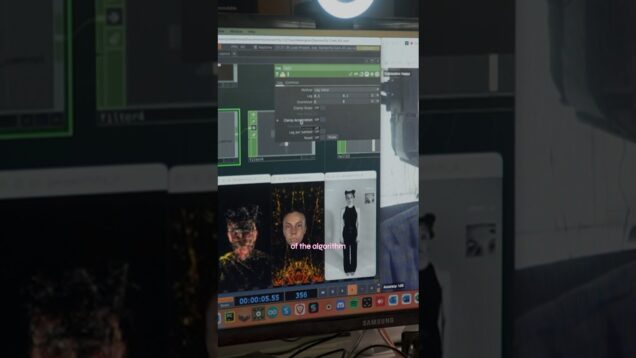

I built a motion tracking system for TouchDesigner

A tutorial on how I built a custom motion tracking controller for TouchDesigner, including the process behind it.

👉 Written in-depth guide: https://stevezafeiriou.com/how-to-send-sensor-data-to-touchdesigner/

👉 Setup guide and functionality of the MPU6050: https://stevezafeiriou.com/mpu6050-sensor-setup/

🥳 Project files on GitHub (code, schematics, 3D models): https://github.com/stevezafeiriou/sensor-data-td

You can use this system for many use cases, from IoT devices to VR controllers.

🔥 Try […]